Case study: Parallelizing a particle-based flow simulation

-

c/o Jessica Hausberger

c/o Jessica Hausberger

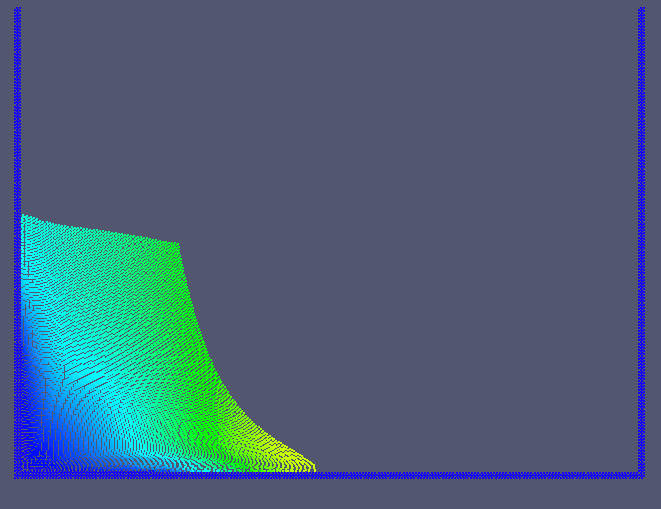

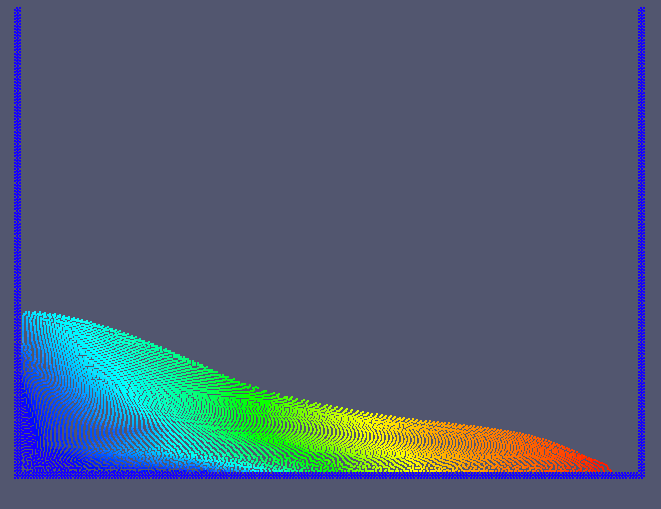

Particle-based simulation (SPH) of the dambreak-problems. The method is particularly suited for flow with complex geometry or free surfaces.

Particle-based simulation (SPH) of the dambreak-problems. The method is particularly suited for flow with complex geometry or free surfaces.

An academic client developed an inhouse code (C++) for simulating complex flows. The employed method (SPH — smoothed particle hydrodynamics) is suited especially for free surfaces and other problems with complex geometry. The characteristics of the simulated flow processes resulted in very small timesteps and thus very long run times. Therefore, the code needed to be parallelized, the target platform was an Intel Xeon multi-core CPU with 12 cores.

Analysis

For efficiency reasons, the SPH algorithm computes particle interactions in a pairwise manner. A naive parallelization of the summation of the interactions would lead to massive data conflicts on a shared memory machine and thus to wrong results. Therefore, more had to be explored.

As it was not clear a priori, which strategies would be performing best, we developed several parallelization strategies, for use at several places (loops) throughout the program.

-

c/o Jessica Hausberger

Blocking is an improved approach for parallelizing the SPH algorithm. Blocks with the same number (color) can be processed independently, needing no synchronization of data writes.

c/o Jessica Hausberger

Blocking is an improved approach for parallelizing the SPH algorithm. Blocks with the same number (color) can be processed independently, needing no synchronization of data writes.

Implementation

We chose OpenMP as parallel programming model, as it is widely supported and portable. Through abstraction and use of generic programming we could limit the implementation of each strategy to a single generic function, which could be used with appropriate parametrization at the various hot spots of the program. This enabled us to switch between the different parallelization approaches easily and to compare their performance. In addition, the generic approach resulted in a decoupling of the algorithmic code from the parallel framework. Thus, switching from OpenMP to C++11 thread would be possible with just a few localized changes.

Results

Several parallelization alternatives were investigated and compared. While the simple methods using explicit synchronization via atomics achieved alread a speedup of 8x on 12 cores, the best, algorithmically more sophisticated approaches achieved a speed-up of 11x, using hyperthreading with 24 threads the speed-up increased to almost 15x. These strategies exploit the locality properties of the problem and algorithms and can in some cases do without any fine-grained synchronization.

The results were presented at the conference "Parallel 2013".